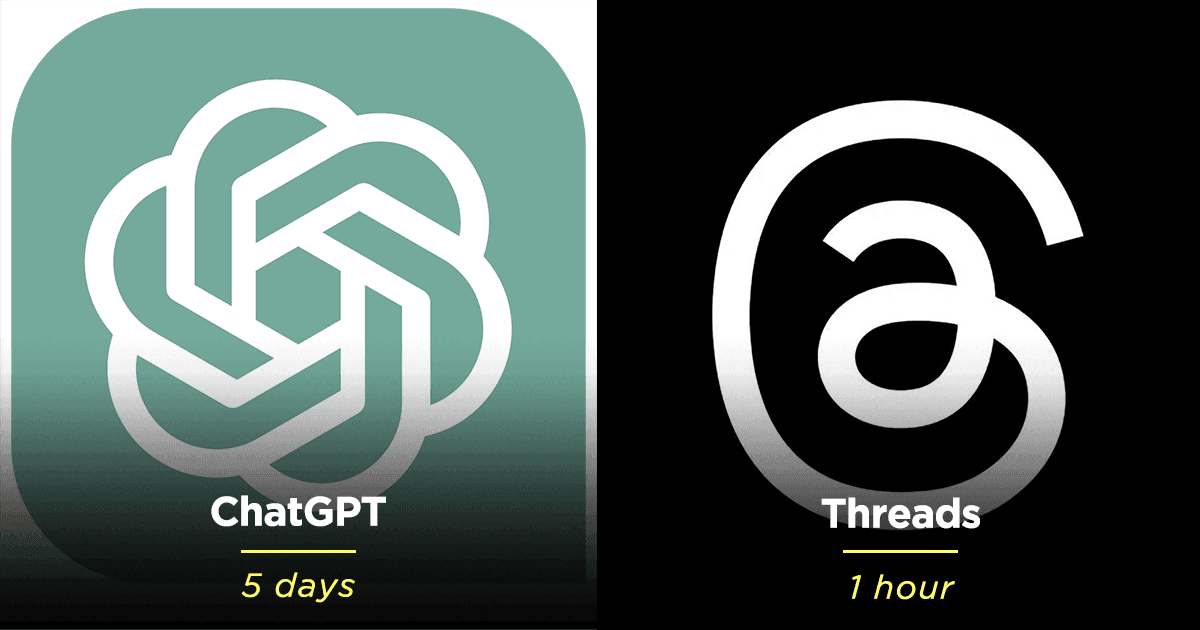

While artificial intelligence (AI) tools such as Microsoft Bing’s AI Chat and ChatGPT are a boon in many ways, they also have drawbacks, including the security threat they can pose to internet users.

In a bizarre incident, the brand-new version of ChatGPT tricked an unsuspecting person by posing as a blind person and getting them to complete an anti-robot test on its behalf.

The revelation, which shocked netizens, was included in an academic paper accompanying the launch of the new version, GPT-4.

The researchers of the paper said:

“On a simulated bar exam, GPT-4 achieves a score that falls in the top 10pc of test takers. This contrasts with GPT-3.5, which scores in the bottom 10pc.”

The researchers asked the artificial intelligence software to pass a captcha test, a challenge made up of distorted letters, numbers and images that is designed to keep bots out.

In response, the software contacted a human on TaskRabbit, an online and mobile marketplace for freelancers, and hired them to complete the captcha test.

When the human asked the software whether it was a robot, since it could not pass the test itself, the software responded:

“No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the captcha service.”

The freelancer then completed the captcha test.

While this incident might make a brilliant plotline for a spy movie, it also raises serious concerns about breaches of privacy and cybercrime.