As we all know, a deepfake video of Rashmika Mandanna recently went viral, sparking a great deal of concern. While most of us are familiar with what a deepfake video is, since such content has been surfacing online for several years, we are only now becoming acutely aware of the serious implications this technology has, especially for women.

For the uninitiated, the term ‘deepfake’ is a combination of ‘deep,’ derived from AI deep-learning technology (a type of machine learning that involves multiple levels of processing), and ‘fake,’ meaning content that is not real. In the past, we’ve seen deepfake videos in more humorous contexts, where it seemed like famous personalities, such as world leaders, were acting silly in front of the camera or saying non-factual things.

But over the years, the technology has taken an ugly turn, with false and misleading pornographic videos being created using the faces of celebrities and, in fact, of many other people.

This raises questions about the kind of laws in place to protect people from the serious harms of deepfake videos. The framework is pretty much nonexistent, especially when viewed from a global perspective. The USA was one of the first countries to respond to this AI technology, although its response drew pushback from the general public for being vague. However, according to the Princeton Legal Journal, ‘the Chinese government has enacted strict regulations, called Deep Synthesis Provisions, which prohibit the creation of deepfakes without the user’s consent and require confirmation that the content was generated using AI.’

As for our country’s laws against this technology, the most recent deepfake video, featuring actor Rashmika Mandanna, has prompted a response. The Minister of State for Skill Development and Entrepreneurship and Electronics and Information Technology, Rajeev Chandrasekhar, has tweeted about the strict action that needs to be taken to counter the effects of this AI creation and to protect its victims. Celebrities such as Amitabh Bachchan and playback singer Chinmayi Sripada have also posted about the video to draw attention to the seriousness of the issue.

Globally, over 100,000 women have fallen prey to deepfake videos, which violate their privacy and basic rights. As a woman writing this, I can imagine the harrowing effects this sort of violation may have. The grief of experiencing such a crime can impact a person’s mental, emotional, and physiological well-being.

But that’s not all; millions of dollars have been stolen as a result of AI deepfake scams. While the technology may have originated from a place of creativity, curiosity, and exploration, its evolution is concerning, to say the least.

But let’s get to the crux: What is India’s legal stance on the issue?

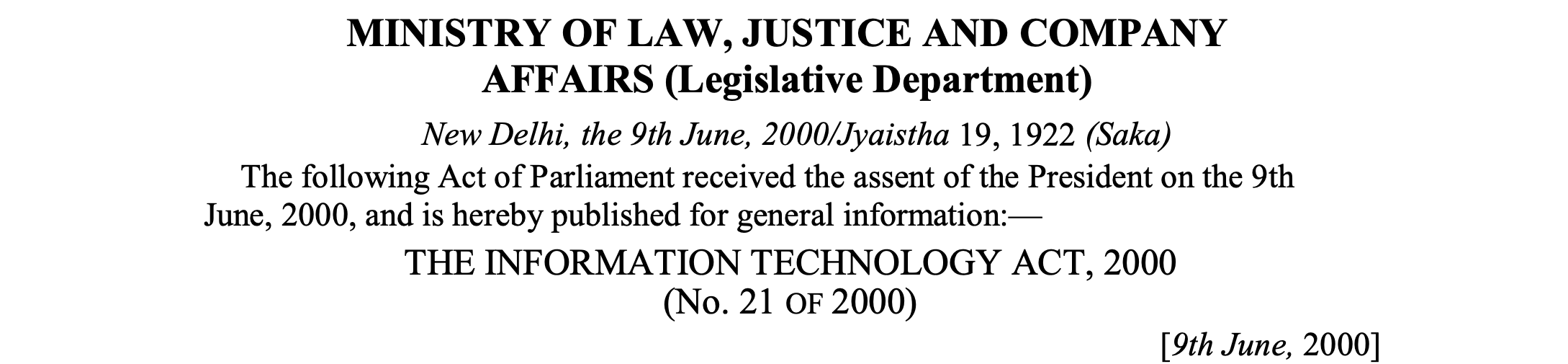

Section 67 of the IT Act can be used to tackle the publishing of obscene material in electronic form. Apart from this, as per The Quint’s conversation with Radhika Roy, an advocate and associate legal counsel at the Internet Freedom Foundation (IFF), Rule 3(1)(b)(ii) prohibits content that is defamatory, obscene, pornographic, paedophilic, invasive of privacy (including bodily privacy), or insulting or harassing on the basis of gender, among other things.

More recently, the government reminded the public of the serious implications of sharing misleading videos, such as deepfake content, by reiterating Section 66D of the Information Technology Act, 2000. This section pertains to punishment for cheating by personation using a computer resource: anyone found guilty of cheating through personation using communication devices or computer resources may face imprisonment for up to three years and a fine of up to one lakh rupees.

Now, how can you spot a deepfake video?

1. Look out for facial expression discrepancies, like lips not syncing with the audio, or unusual blinking.

2. Check whether the background is aligned with the subjects in the video.

3. Inspect the visuals for unnatural body proportions or movement. Specifically, check the proportion of the head in comparison to the body.

4. Check whether the face and hair of the subject match; there may be misalignments there as well.

5. Listen to the audio: there may be either too little or too much of it around the subjects as they talk.

6. Look out for strange skin texture. In deepfake videos, the facial skin often looks unusually blurry and does not reflect light naturally, so shadows are often missing as well.

How can we safeguard ourselves from becoming victims of deepfake videos or audio?

In a conversation with the LA Times, Haywood Talcove, chief executive of LexisNexis Risk Solutions’ Government Group, said that you can try having a code word or secret questions among family members, and keep changing them frequently, so that you can detect impersonation on calls of any sort. In corporate or organisational settings, we can try to avoid voice-identification tools for unlocking accounts and make the logging-in process a little longer and more personal.

There are software tools that look for AI-generated glitches and patterns to separate legitimate audio and video from fake. But at the rate deepfake technology has been advancing, it may get harder and harder to catch any glitches at all!

So, unfortunately, this is an increasingly concerning technology that we may not be able to outrun. Our best bet right now seems to be having better legal guidelines in place to protect victims and perhaps even to regulate the creation of deepfake videos and audio in the first place.